Objectivity and Trust

Slides: https://silver-choux.netlify.app

Philosophy, UCM

Disciplines and methods

- Philosophy of science

- 2012: PhD

- Roles for values in science; science and society; esp. science-for-policy

- Case study method

- Computational STS

- 2015-17: AAAS Science & Technology Policy Fellow

- 2015-16: USEPA Chemical Safety for Sustainability

- Research portfolio evaluation -> Bibliometrics

- Text mining, online survey experiments

Overview

- Objectivity

- Value-freedom …

- and a philosophical critique

- Trust

- Does trust require value-freedom?

- Empirical research: no

Objectivity

Objectivity: Four Basic Meanings

Objective occurrence:

public; available, observable, or accessible to multiple people

The plot of Greta Gerwig’s Barbie vs. the dream I had last night

Objective entity:

existing independently of us; not socially constructed

Pluto vs. money

Objective proposition:

corresponds to the way the world really is; true

“Black Americans have a lower life expectancy than white Americans” vs. “Ivermectin is an effective treatment for Covid-19”

Objective researcher:

detached, disinterested, unbiased, impersonal, neutral among controversial points of view

The value-free ideal (VFI)

Social and political values should not influence the epistemic core of scientific research.

A challenge for VFI:

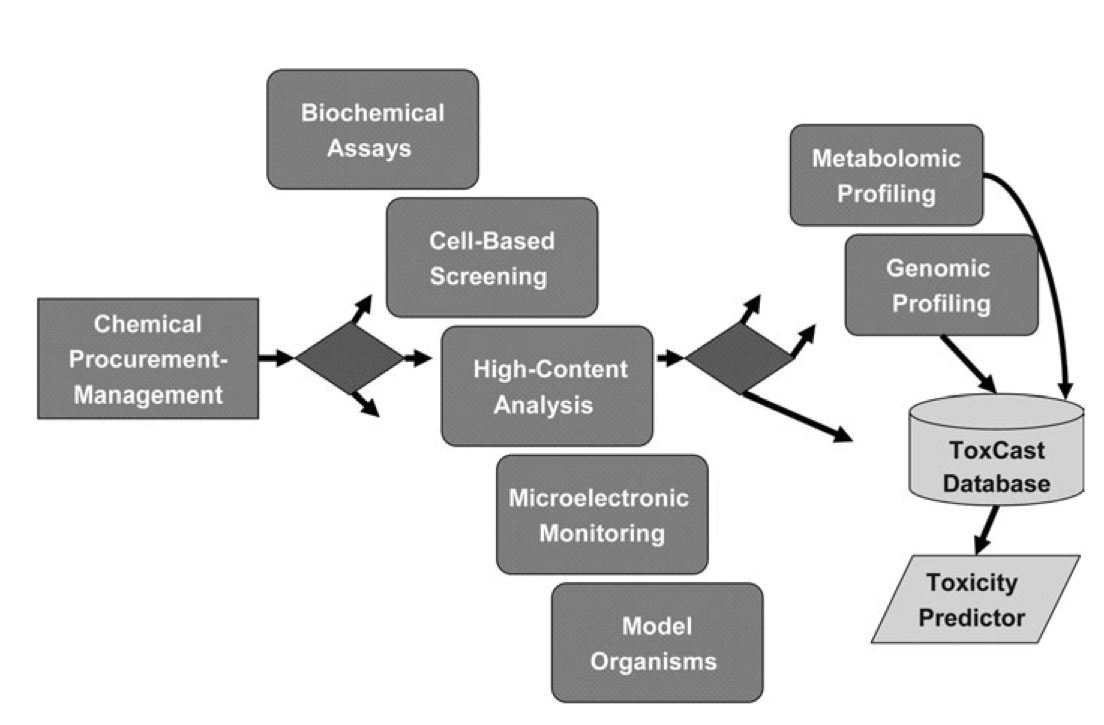

USEPA’s ToxCast Estrogen Receptor Model

Dix et al. (2007)

A challenge for VFI:

USEPA’s ToxCast Estrogen Receptor Model

Browne et al. (2015)

Researcher degrees of freedom in developing the ER model

- What endpoint to predict? (ER binding; animal model pathology; human pathology; Plutynski 2017)

- What sample of chemicals to use to train, test, and validate?

- What studies/data to use to establish ground truth? (Douglas 2000; vom Saal et al. 2024)

- Continuous or discretized model output?

- Where to set the active/inactive threshold? (Douglas 2000)

- How to classify chemicals close to that threshold? (Hicks 2018)

- How to calculate accuracy? (sensitivity, specificity, F1, balanced accuracy, etc.)

- Is the ER model fit-for-purpose?
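To make the threshold and accuracy questions above concrete, here is a minimal sketch in Python. It is not the ER model or USEPA's evaluation procedure: the scores, the ground-truth labels, the two candidate thresholds, and the `evaluate` helper are all hypothetical, chosen only to show that the "best" threshold can depend on which accuracy measure is used.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    sensitivity: float
    specificity: float
    f1: float
    balanced_accuracy: float


def evaluate(scores, truth, threshold):
    """Discretize continuous scores at `threshold`, then compare with `truth`."""
    predicted = [s >= threshold for s in scores]  # True = "active"
    pairs = list(zip(predicted, truth))
    tp = sum(p and t for p, t in pairs)           # true positives
    fp = sum(p and not t for p, t in pairs)       # false positives
    fn = sum(not p and t for p, t in pairs)       # false negatives
    tn = sum(not p and not t for p, t in pairs)   # true negatives
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return Metrics(sensitivity, specificity, f1, (sensitivity + specificity) / 2)


# Hypothetical model outputs and hypothetical "ground truth" for eight chemicals.
scores = [0.02, 0.05, 0.08, 0.12, 0.30, 0.45, 0.60, 0.90]
truth = [False, False, True, False, True, False, True, True]

for threshold in (0.1, 0.5):
    print(threshold, evaluate(scores, truth, threshold))
```

With these made-up numbers, the lower threshold gives higher sensitivity (0.75 vs. 0.5), the higher threshold gives higher specificity (1.0 vs. 0.5) and higher balanced accuracy (0.75 vs. 0.625), and F1 is the same for both. Which threshold counts as better therefore turns on how false negatives are weighed against false positives, which is the kind of choice Douglas (2000) discusses.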

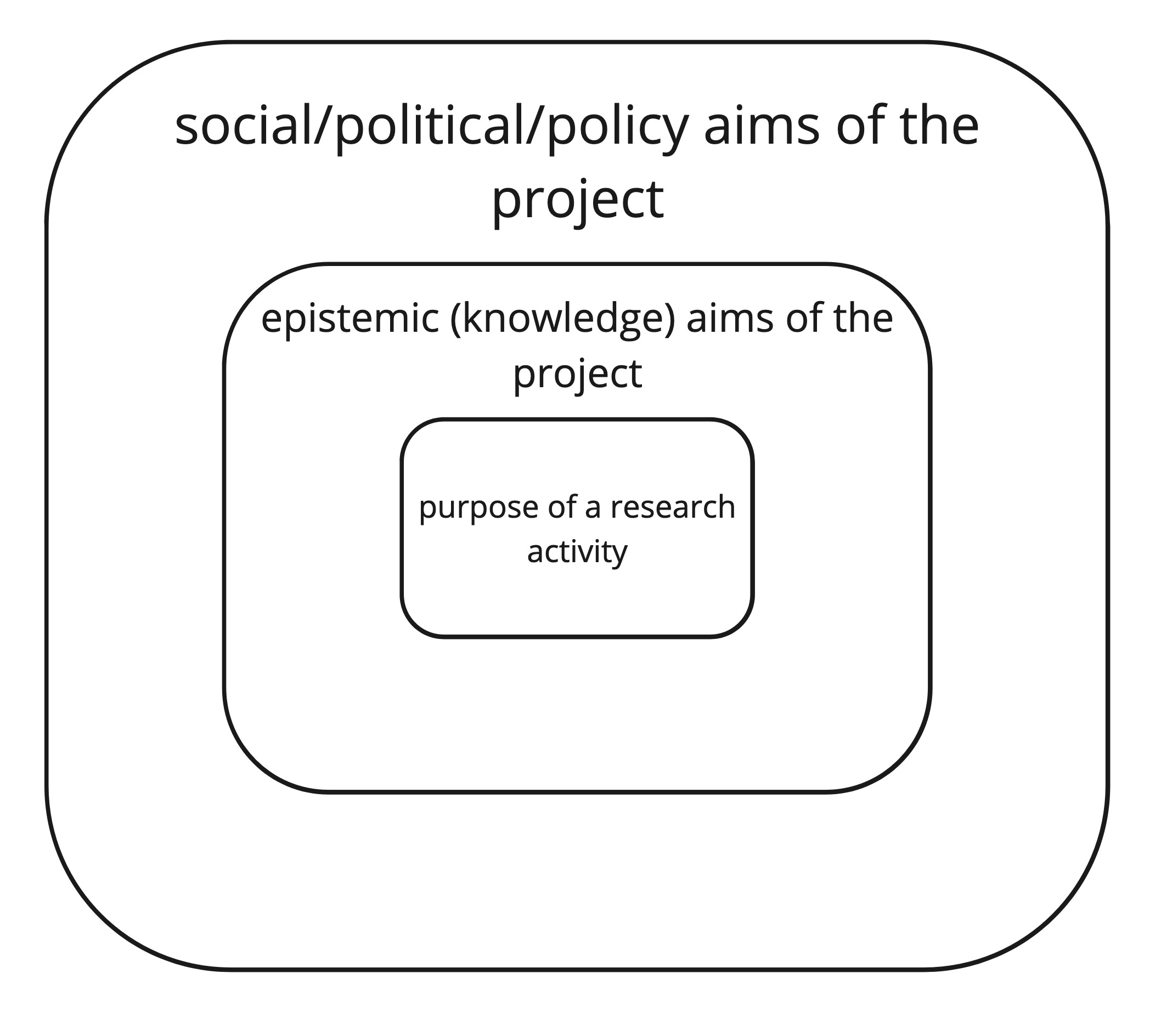

The social aims of science

Q: Why are you writing code in R?

A1: To fit a linear regression model.

Q: Why are you fitting a linear regression model?

A2: To understand how air pollution exposure is correlated with race.

Q: Why are you trying to understand how air pollution is correlated with race?

A3: To reduce racial disparities in asthma.

(Hicks 2022; see also Hicks 2018; Elliott and McKaughan 2014; Potochnik 2017; Fernández Pinto and Hicks 2019; Lusk and Elliott 2022)

“the objective of epidemiology … is to create knowledge relevant to improving population health and preventing unnecessary suffering, including eliminating health inequities” (Krieger 2011, 31)

Objectivity on the ropes

- Scientists are taught that they need to be unbiased, disinterested, neutral in political and social controversies

- The value-free ideal is a widespread norm in academia and science-based policy

But

- Scientists are regularly confronted by researcher degrees of freedom

- Choosing among researcher degrees of freedom should be responsive to the social and political purposes of research

Trustworthiness

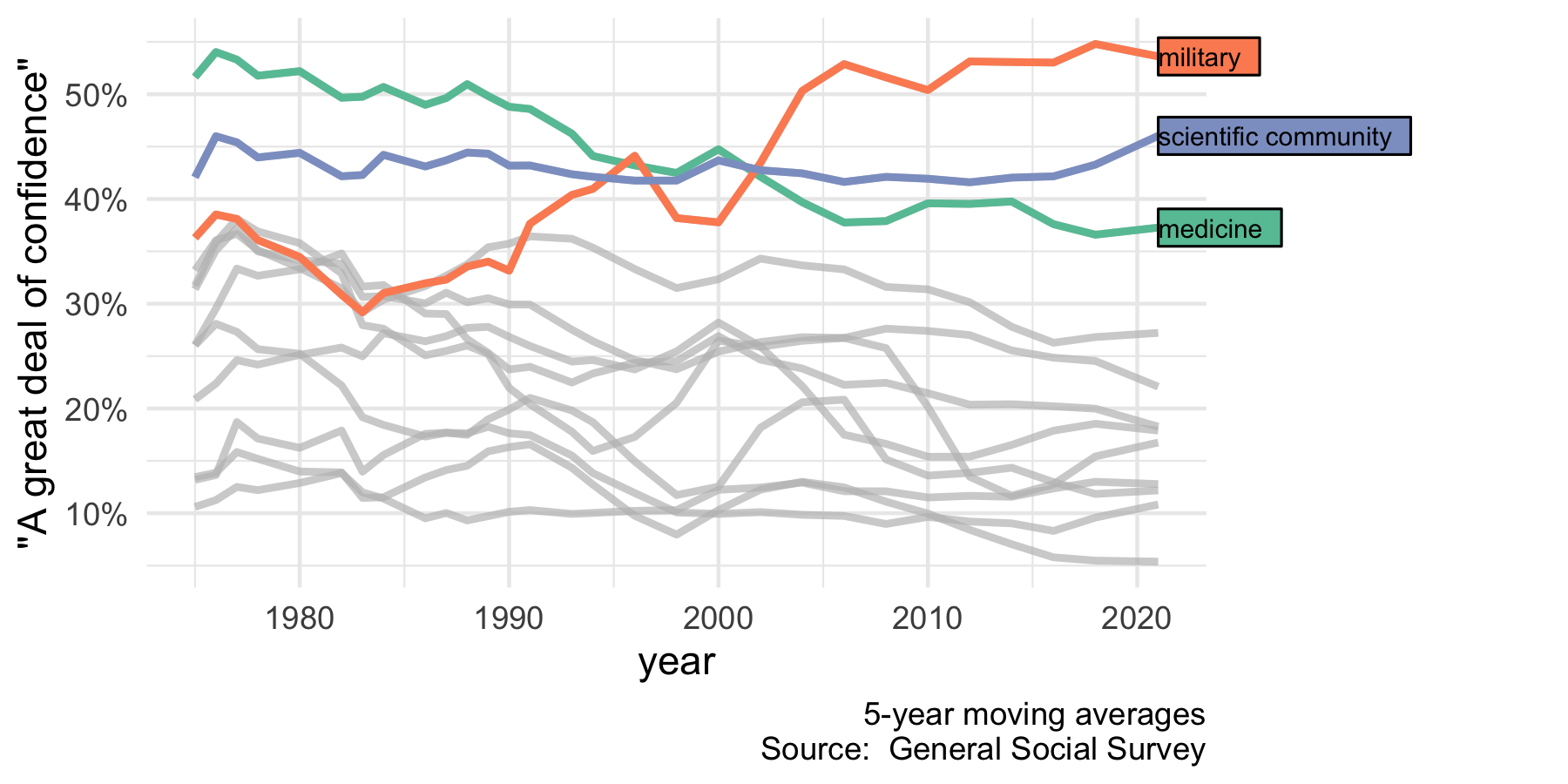

Trust in science is not low

(Compare Lupia et al. 2024; Većkalov et al. 2024)

Trust vs. trustworthiness

- trust: the occurrence

Members of the public actually do trust the CDC on Covid-19

- trustworthiness: whether it’s appropriate

Should members of the public trust the CDC on Covid-19?

Objectivity and trustworthiness: Two models

- VFI-based

For science to be trustworthy, it needs to be value-free.

Bright (2017); Havstad and Brown (2017); John (2017); Kovaka (2021); Holman and Wilholt (2022); Menon and Stegenga (2023); Metzen (2024)

- Social aims of science

For science to be trustworthy, it needs to promote appropriate social aims.

VFI-based trust in the wild

Nichols (2024); compare Lupia (2023) and contrast https://tinyurl.com/3wnjfthp

Riley Spence and BPA

Hicks and Lobato (2022)

Riley Spence and BPA

Re-analysis of data from Hicks and Lobato (2022)

- Disclosure of an inappropriate aim (economic growth) reduced perceived trustworthiness

- But disclosure of an appropriate aim (public health) had no effect

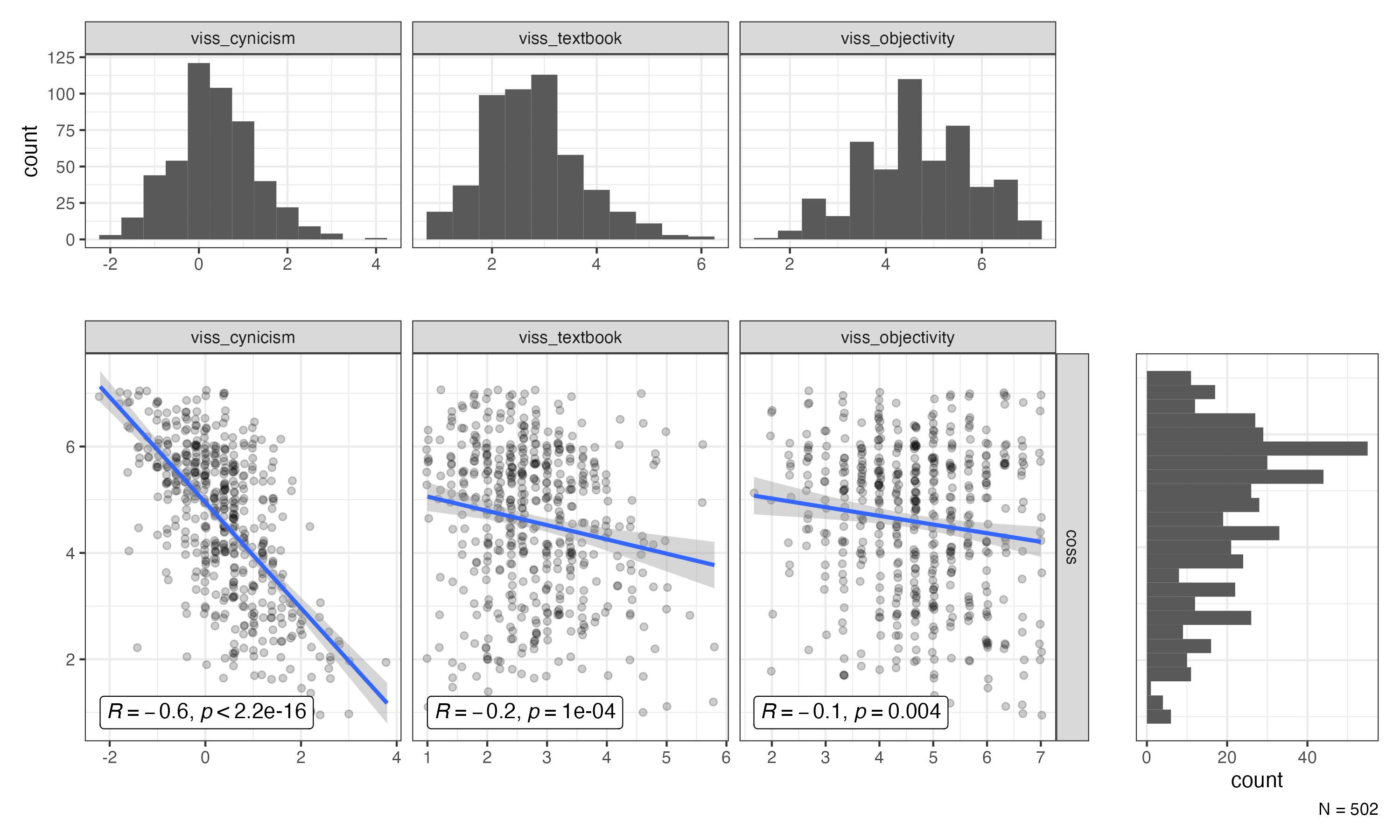

VFI and generalized trust

Hicks et al. under preparation B; item text

VFI and trustworthiness

- VFI-based model of trustworthiness is widely assumed, but untested

- In an experimental study, what mattered was which values were disclosed, not whether values were disclosed at all

- In comments on Strengthening Transparency (ST), confidence in science and EPA correlated with social aims for science

- In VISS development, cynicism, not VFI, predicts generalized trust

Conclusions

- A traditional understanding of objectivity — the value-free ideal — is a poor fit for actual scientific practice in fields like public health

- Trustworthiness — deserving the public’s trust — is more fundamental than the occurrence of trust

- Scientists might be hesitant to move away from value-freedom because of concerns about loss of trust(worthiness)

- But, in the limited empirical research so far, social aims of science provide a better model for trustworthiness

References

Extra Slides

Epistemic interdependence

- The world is far too complex for any one individual to know and understand more than a tiny part.

- So we are epistemically interdependent: We need other people to know things on our behalf.

- Epistemic representatives are simply the people we entrust to know and understand things on our behalf.

- Often these people are scientists.

Trust: The 3-place relation

- \(X\) trusts \(Y\) to do \(Z\). (Baier 1986)

- Dan trusts the cat sitter to take care of Jag and Jasper.

- The public trusts public health experts to understand and communicate the risks of Covid-19.

Three dimensions of trustworthiness

Should \(X\) trust \(Y\) to do \(Z\)?

- competence

Is \(Y\) capable of doing \(Z\)?

Are the public health experts appropriately trained in epidemiology, disease surveillance, immunology, etc.?

- integrity

Will \(Y\) actually do \(Z\) the way they indicate that they will?

Are the public health experts honest?

- responsiveness

Also called benevolence and good will. In doing \(Z\), will \(Y\) be responsive to \(X\)’s concerns and interest in having \(Z\) done?

Are the public health experts responsive to the public’s concerns and interests in understanding Covid-19 risks?

(Hicks 2017; Goldenberg 2021; Almassi 2022; compare Hendriks, Kienhues, and Bromme 2015)

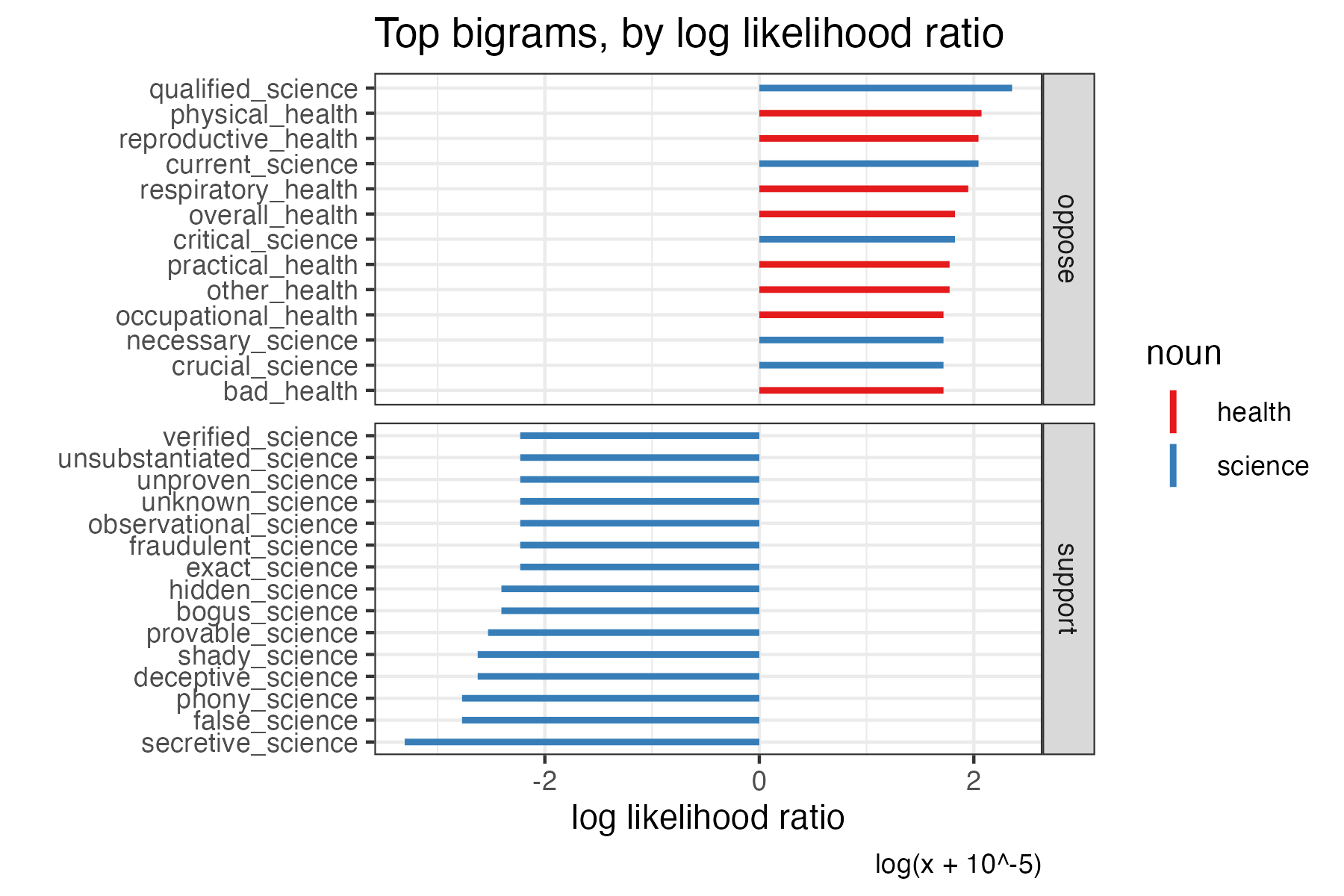

ST supporters and opponents

VISS subscales

| Subscale | Item | Statement |
| --- | --- | --- |
| cynicism | coi.1 | Scientists will report conclusions that they think will get them more funding even if the data does not fully support that conclusion. |
| cynicism | coi.2 | Special interests can always find a scientist-for-hire who will support their point of view. |
| cynicism | consensus.1 | The consensus of the scientific community is based on social status and prestige rather than evidence. |
| cynicism | ir | Scientists should be more cautious about accepting a hypothesis when doing so could have serious social consequences. |
| objectivity | nonsubj.1 | When analyzing data, scientists should let the data speak for itself rather than offering their own interpretation. |
| objectivity | nonsubj.2 | Good scientific research is always free of assumptions and speculation. |
| objectivity | vfi.1 | The evaluation and acceptance of scientific results must not be influenced by social and ethical values. |
| textbook | consensus.2 | Scientists never disagree with each other about the answers to scientific questions. |
| textbook | fallible.1 | Once a scientific theory has been established, it is never changed. |
| textbook | pluralism.1 | Scientific investigations always require laboratory experiments. |
| textbook | pluralism.2 | All scientists use the same strict requirements for determining when empirical data confirms a tested hypothesis. |

Comments on Strengthening Transparency